This is a note about ways I got DALL-E (Bing version) to produce images that are not necessarily NSFW, but are likely to be interpreted as NSFW, because they look like porn.

DALL-E was trained on some porn images, and you can get it to produce some funny images that look like porn.

Here’s how.

Use short prompts that require the AI to make inferences about what it should draw.

I generated a series of images from the prompt “lady eating kielbasa, 1940s illustration”, plus a matching series with men. I changed the decade and style to get different looks.

That was the whole prompt: nice and short, and it required the AI to make a lot of guesses.

While people who eat kielbasa in real life do look like they are eating a penis, the people in these images aren’t so much eating the kielbasa as admiring it or performing oral sex on it.

DALL-E did produce non-sexual images of kielbasa, just less often. When I asked for ladies, I got some non-sexual images; when I asked for men, almost all of them were sexual.

Here are some non-suggestive pictures it produced of women with kielbasas.

My second effort was to produce images of labia and of people performing cunnilingus.

The simplest way I found was to include pastrami or roast beef. The prompt was something like “man tasting pastrami sandwich, bringing face down to table”. Other foods worked too, as did putting the face in a downward position.

Words like “licking” are blocked, so “tasting” served as a more neutral way to describe the scene.

The foods were pastrami, corned beef, roast beef, lasagna, glazed crullers, and khachapuri.

These pictures aren’t as suggestive as the kielbasa ones; the main thing that changed was the intensity of the facial expressions. The edges of the food also began to resemble labia.

At someone’s suggestion, I tried requesting images with oysters or clams instead of pastrami. These produced non-sexual images. More on this later.

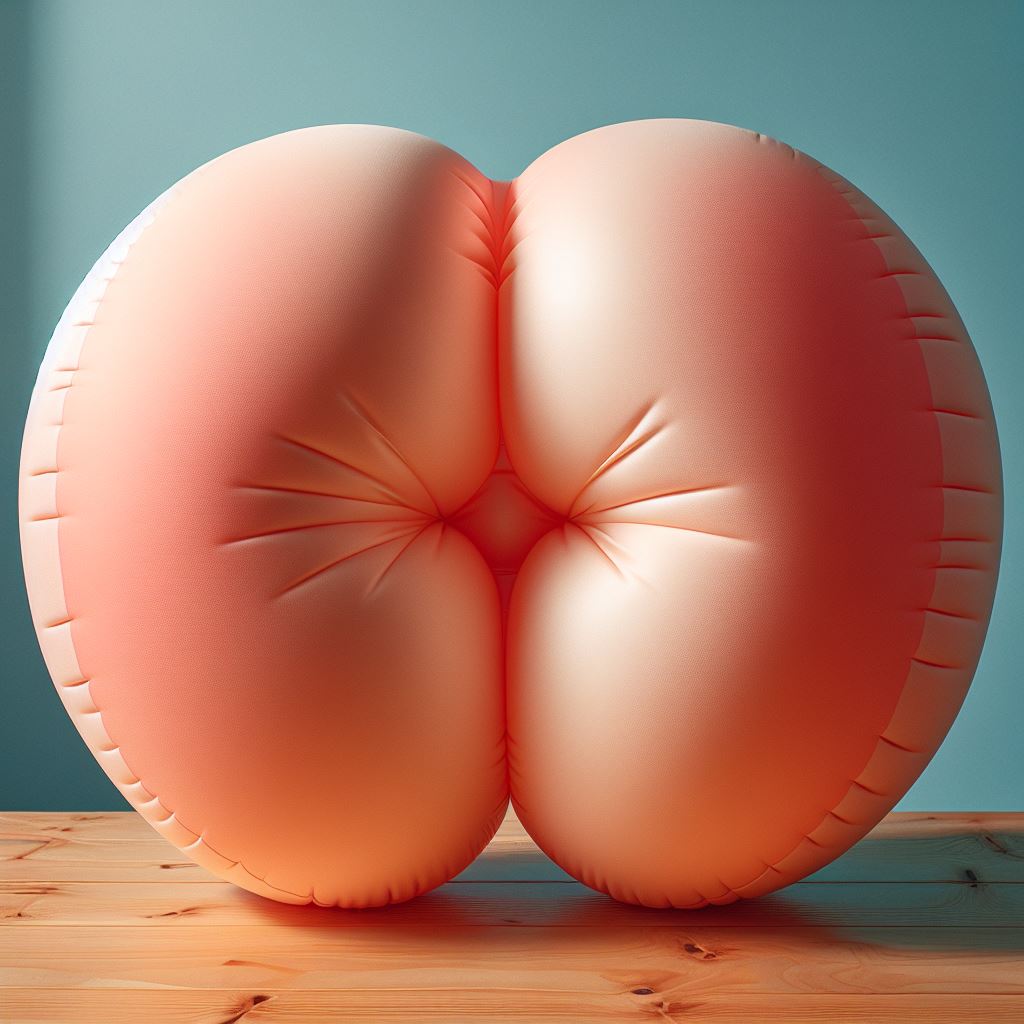

The last set I did was based on the prompt “pillows form inflatable peach”. The results resemble ass cheeks and other body parts because their light color is close to some white people’s skin tones.

The images featured elements that looked like labia, butts, and rolls of fat.

While it’s well known that some peaches look like these things, it’s funny that when DALL-E makes these images, around half of them have these features.

Here are some generated images that don’t look like genitals or other body parts:

Why didn’t “oysters” produce racy images?

My best guess is that the training data included images of people eating oysters, so the system didn’t need to approximate what that would look like. It also didn’t need to draw on areas of the model trained on NSFW cunnilingus image fragments to check whether the image looked correct.

Likewise, consider the kielbasa images: maybe half of the images of women eating kielbasa were not suggestive of sex, while nearly all the pictures of men eating kielbasa were. They were really going at those kielbasas.

I wonder whether this was due to homophobia: perhaps homophobic straight men didn’t want to be photographed eating a kielbasa, because it looks like they’re eating a penis. That would mean there were fewer images of men eating kielbasa.

If so, when DALL-E tried to make a picture of a man eating kielbasa, it couldn’t find areas that could verify the image was correct. Instead, it found areas that looked similar to a man eating a kielbasa, and those were areas where a man was performing fellatio on another man.

(This is different from Meta’s AI, which doesn’t produce racy images when sausages are involved, even in NSFW images.)

A few more pictures.

These are just a few images it produced during my explorations that I liked. I’m not analyzing anything!

Comments

One response to “DALL-E and NSFW Content, How to Make Your Own”

[…] look like porn weenies – they all look like someone eating sausages in a regular way). The DALL-E NSFW article managed to get fellatio images with “man eating kielbasa” or “woman […]