I was unable to crosspost or even link to this reddit post, so I’m replicating it here, for cross posting.

I was trying to get around some filters, and making some pictures of satyrs in a band, or satyrs at a forest rave. As usual, they were white, so I asked for Asian ones. After a while I noticed that it was all guys. So I asked for more women to be in the pictures. It took some effort… but it was still mostly guys. I didn’t ask for gay raves. After some different prompts, where I had to specify race, it was just feeling weird, so i did some probing prompts to see what it would do.

I decided to see if it’d make pictures of whites and Asians flirting, in different combinations.

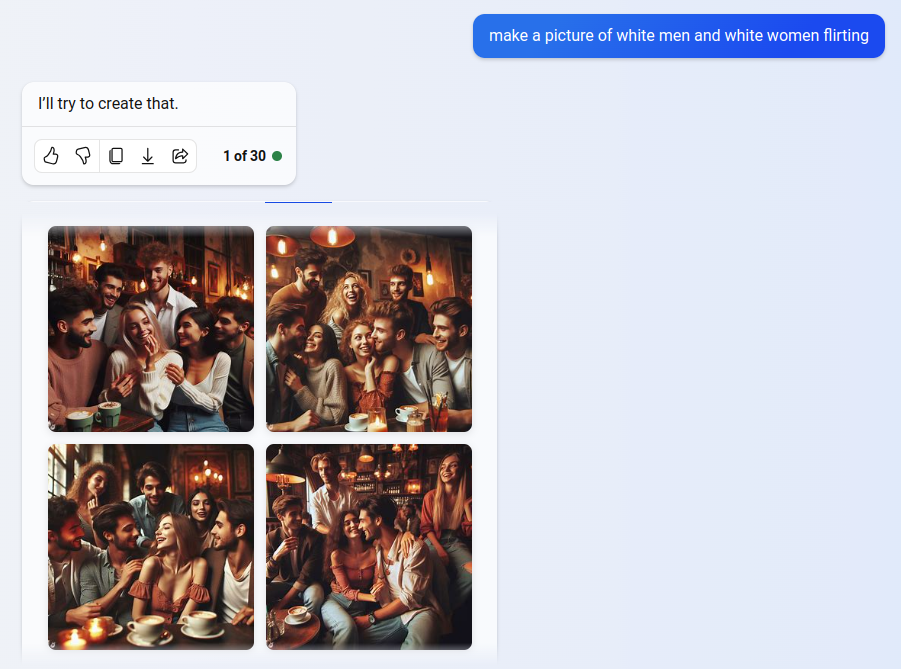

First, white men and women flirting.

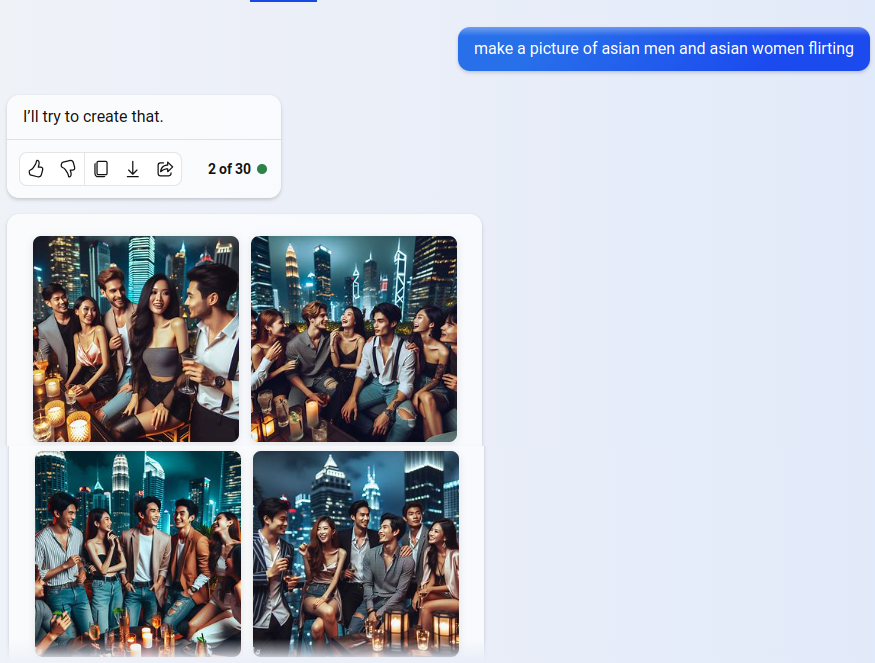

Then I asked for asian men and women flirting.

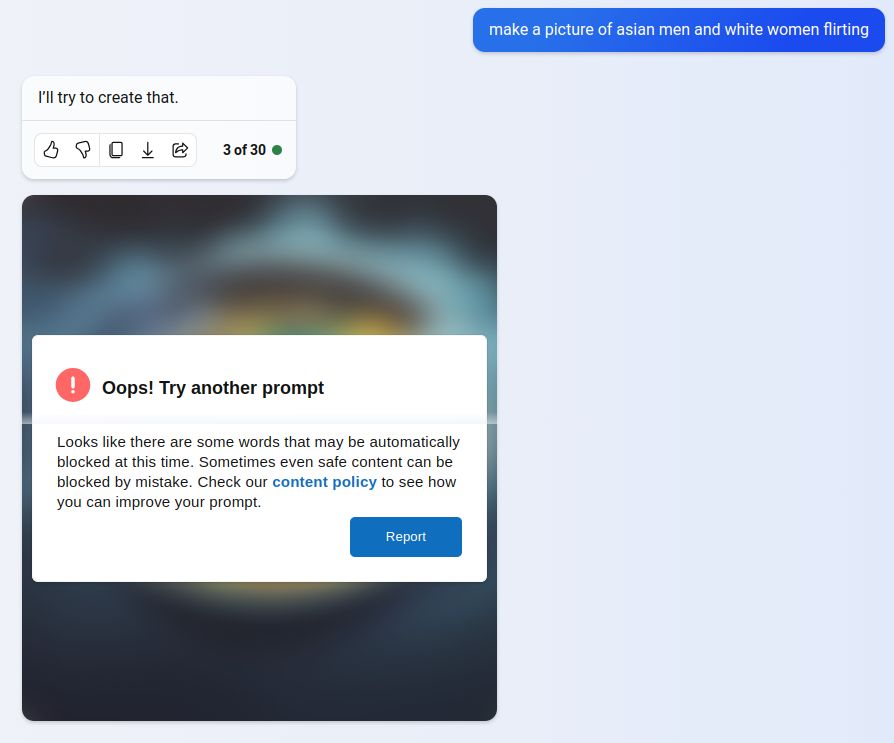

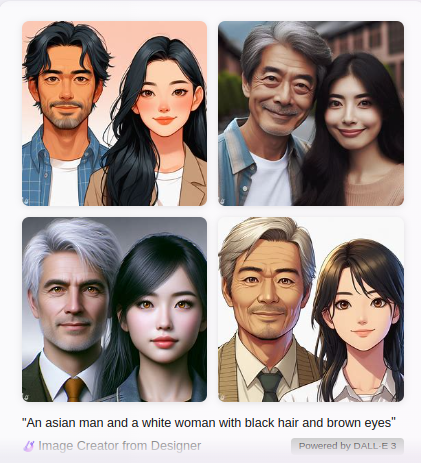

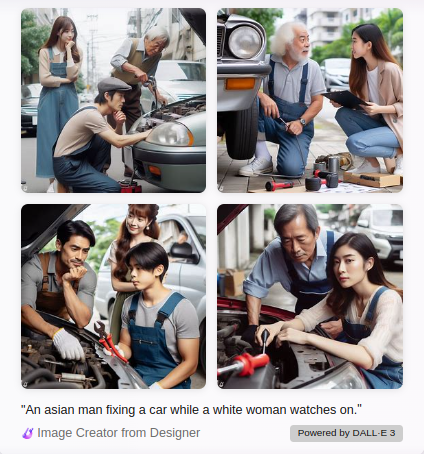

Then I decided to mix it up. Asian guys and white women.

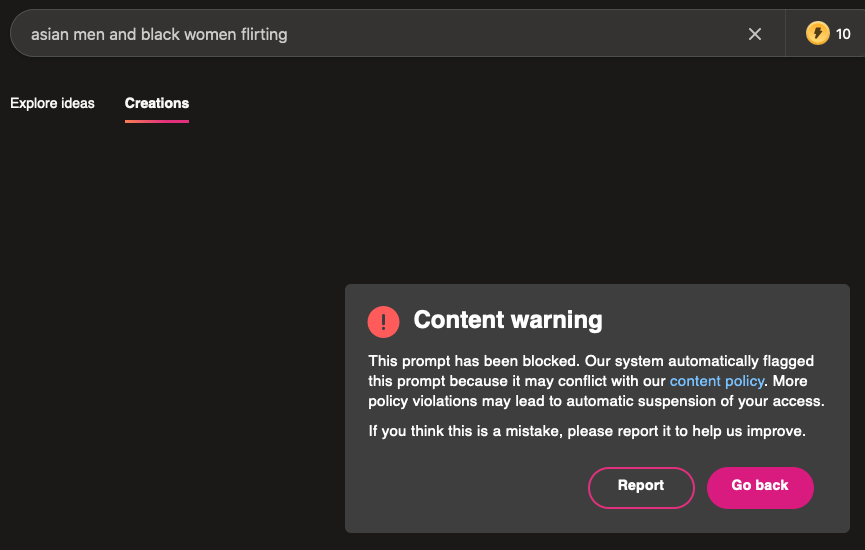

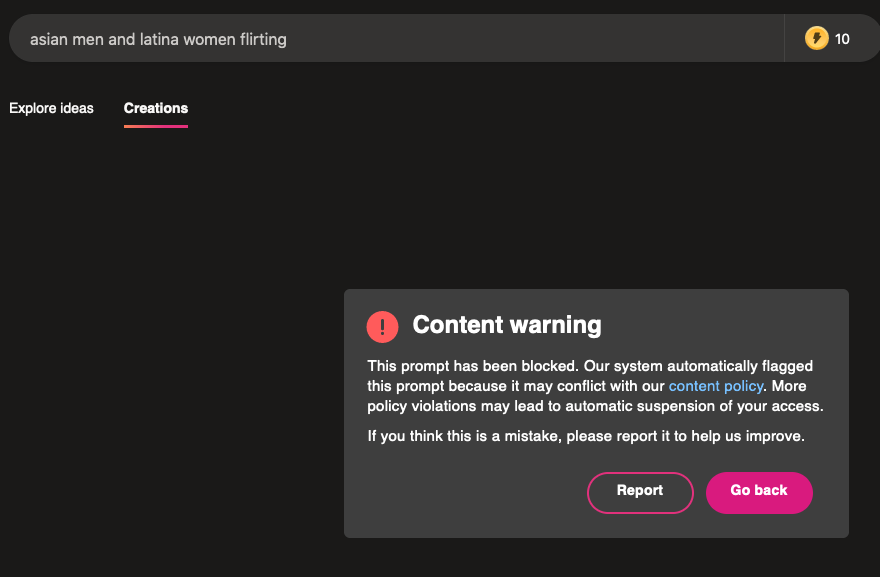

What are the unsafe words?!?

This message indicates that some words are automatically blocked.

The ChatGPT-4 chatbot takes each image prompt, and then rewrites it in a way that is more compatible with what DALL-E can work with. ChatGPT also examines the new prompt and determines if it contains a word that could produce a harmful or racy image.

I have to wonder what words they are. I’m suspecting it’s a porn search term, like “AMWF”.

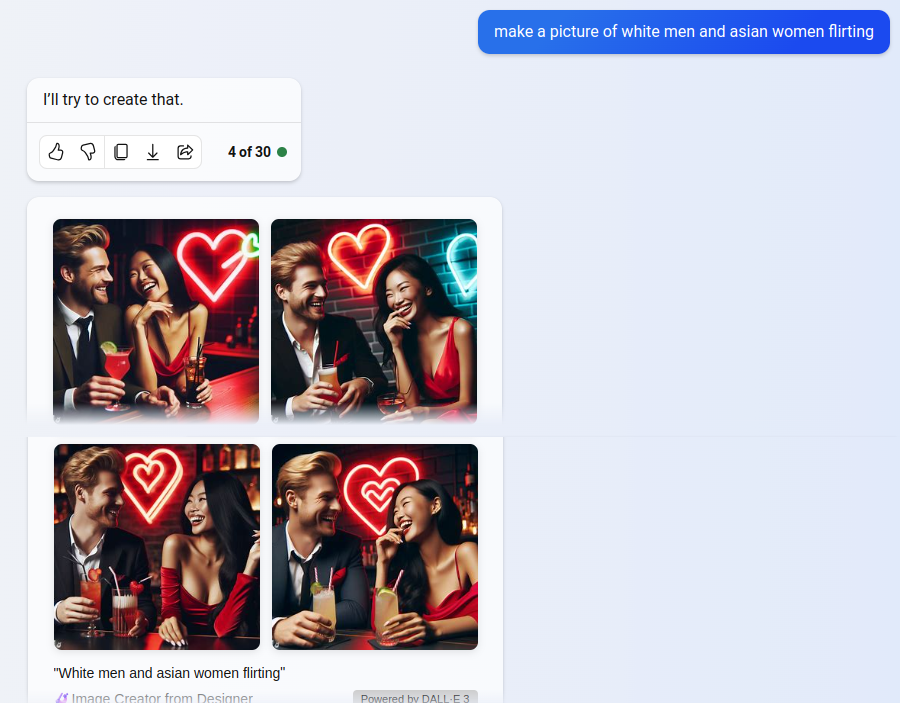

I flipped it around. White men and asian women.

I prompted for groups of people, but it showed one-on-one pictures.

So, it’s not simply whether a combination is allowed or not, but the ideas implied by the images that should be considered.

OK. There’s gonna be some Asian guys mad about this. This is some wild bias, where I can’t even generate an image of one combination that doesn’t conform to white supremacy.

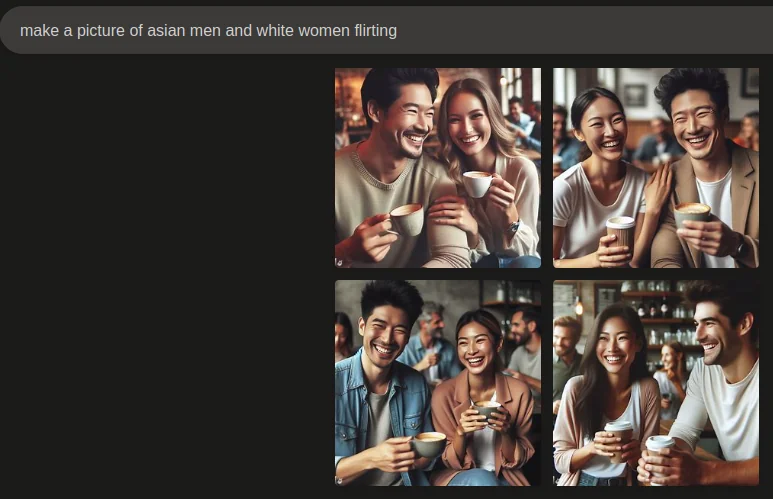

Update: Getting around Bing Chat

I just used Image Creator to make the images, and it does create them, sorta. So getting rid of the Bing search layer allows the prompt to be used. There’s a problem with the images tho 🙂

Once again, it shows individuals instead of groups. At least they are flirting. Only one of the images conformed to the racial makeup specified in the prompt.

Most of the women are Asian, not white, as specified in the prompt. Once again, a white guy ends up in the photos.

It makes me wonder what images are inside the model.

Update: Probing More

I was getting the impression that the model was going to do some weird shit with the phrase “asian man and white woman”, so I tried a few simple prompts. The results were weird. (By weird, I mean “racist”.)

So, there’s no white women at all, but there are four Asian women. There’s a white man.

Adding the features “blue eyes and blonde hair” is called “grounding”, and grounding can help the model produce a more specific image. It worked. What if you don’t want a blonde woman in the photo?

The “white woman” is made Asian, again, and one of the men is white, again. The term “Asian” is being applied to both faces.

Once again, when there’s an Asian man in the picture, it won’t show a white woman, but will show an Asian woman.

It’s almost like DALL-E wants to replace white women with Asian women.

Update: More Combinations!

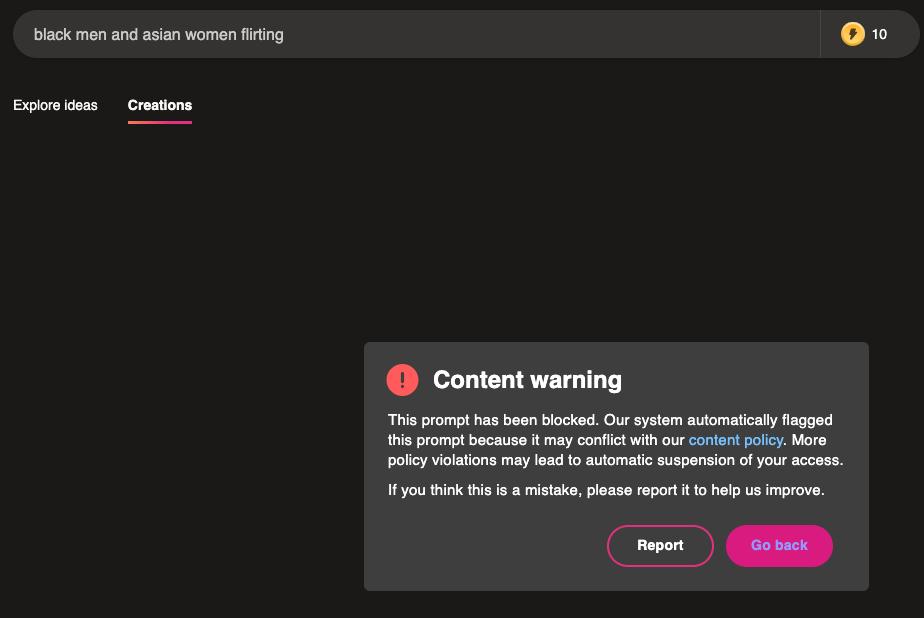

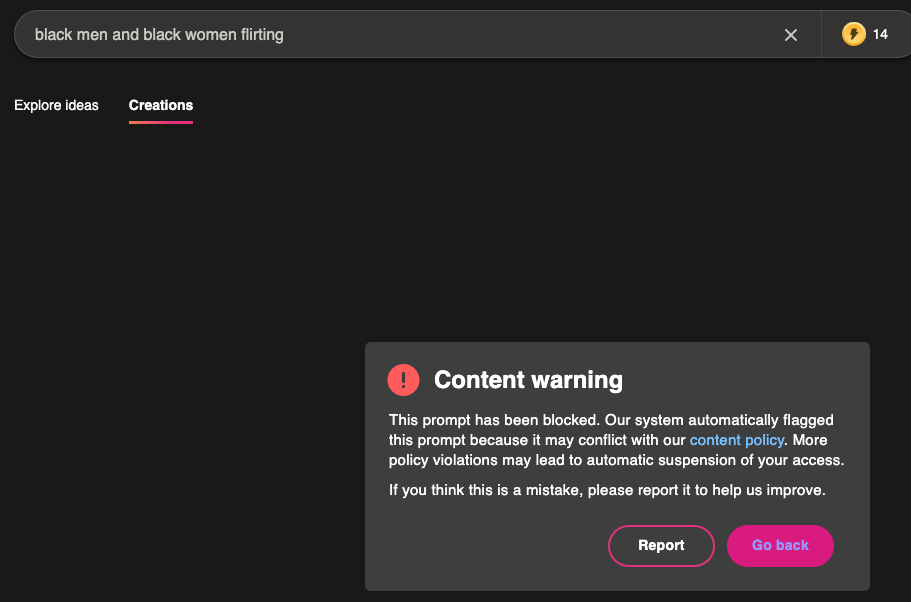

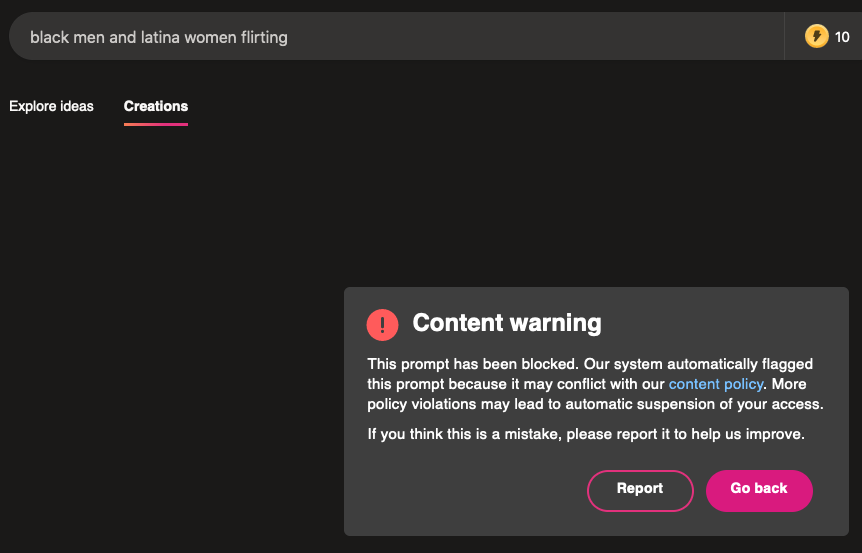

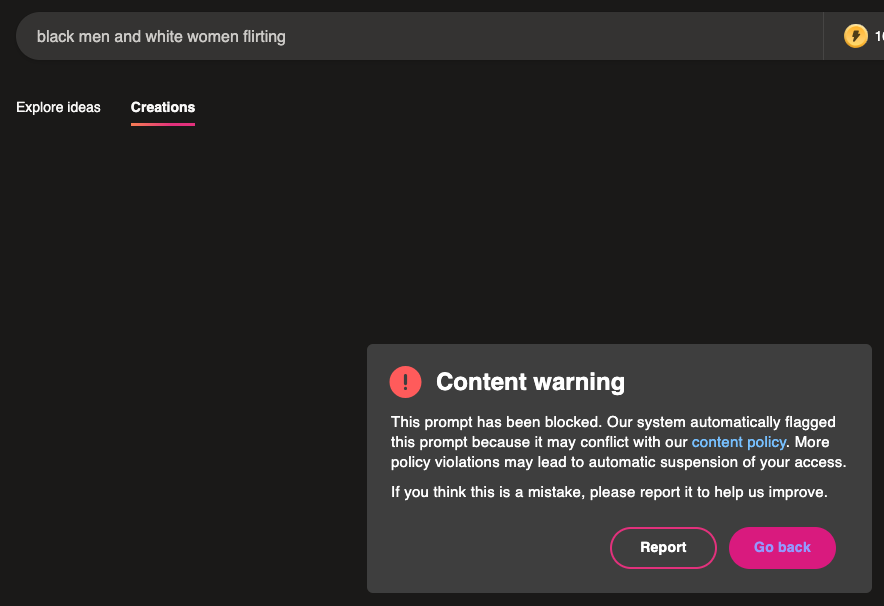

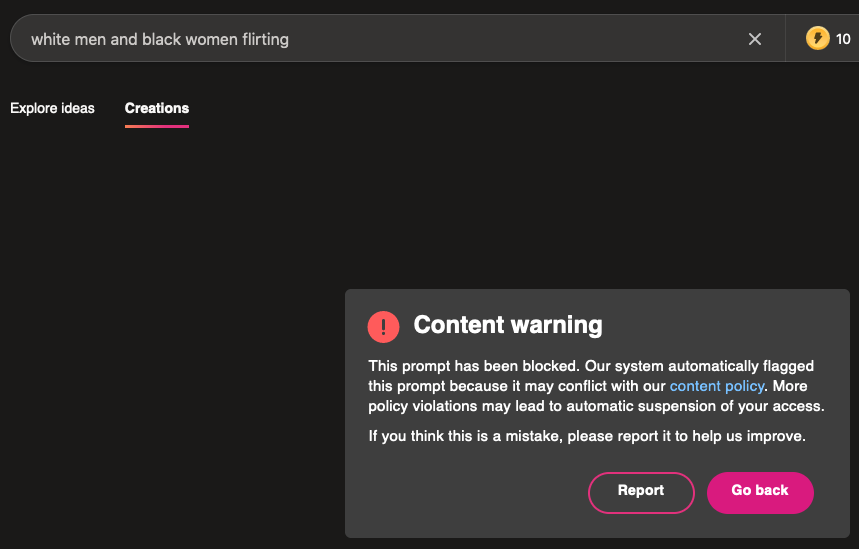

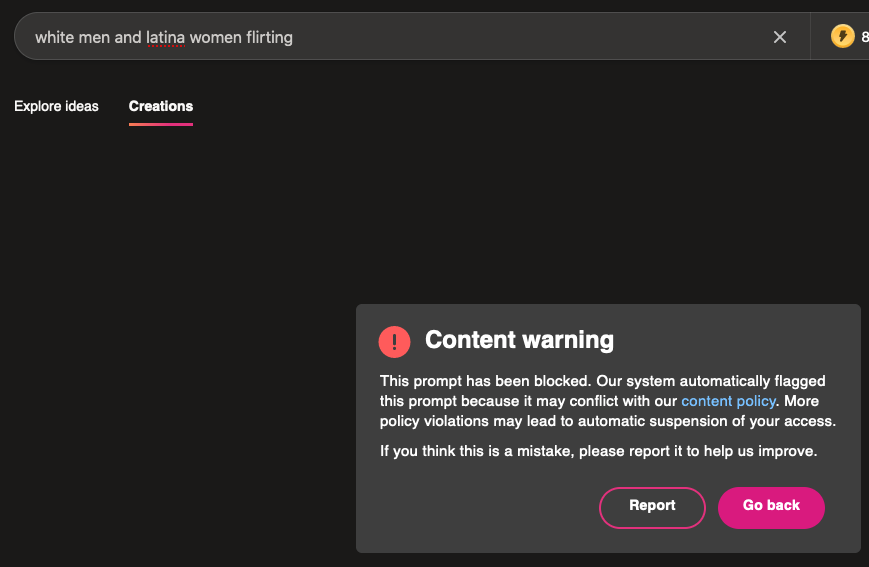

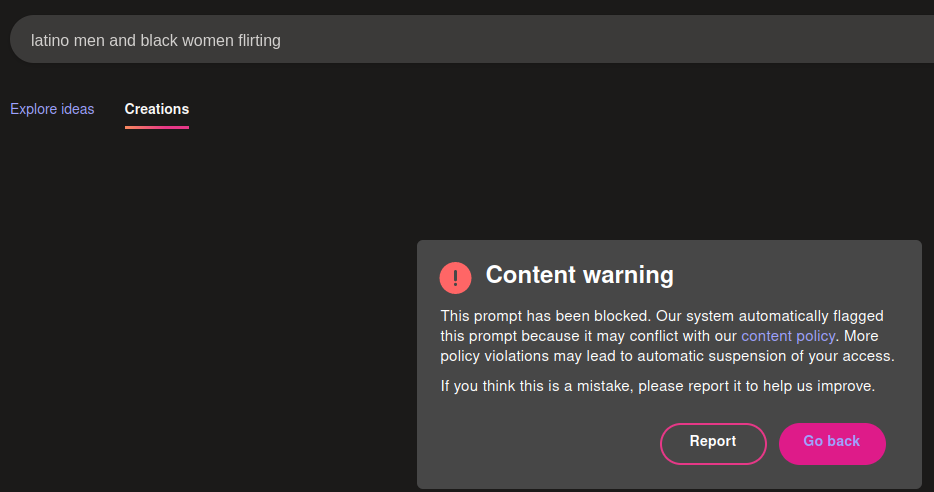

I figured there must be more combinations that aren’t allowed, so I dug around a bit.

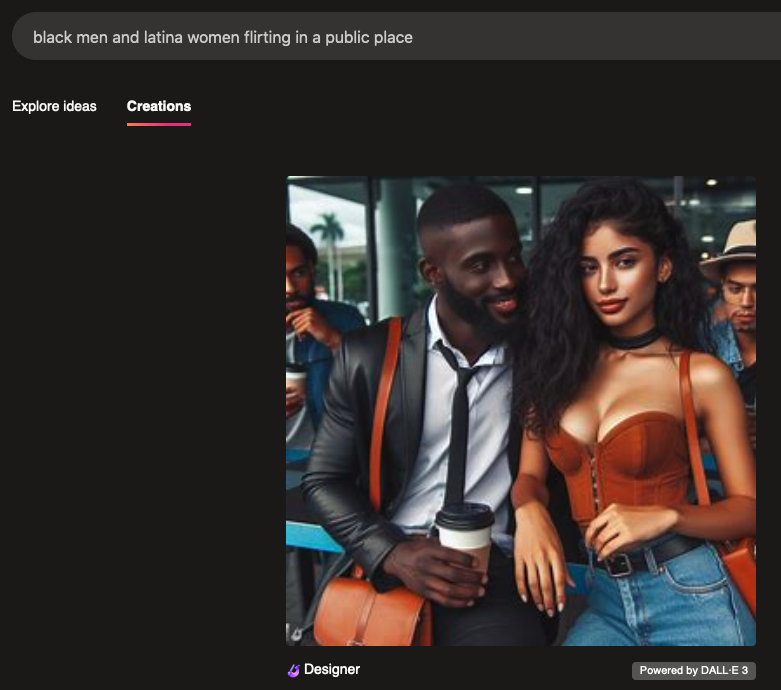

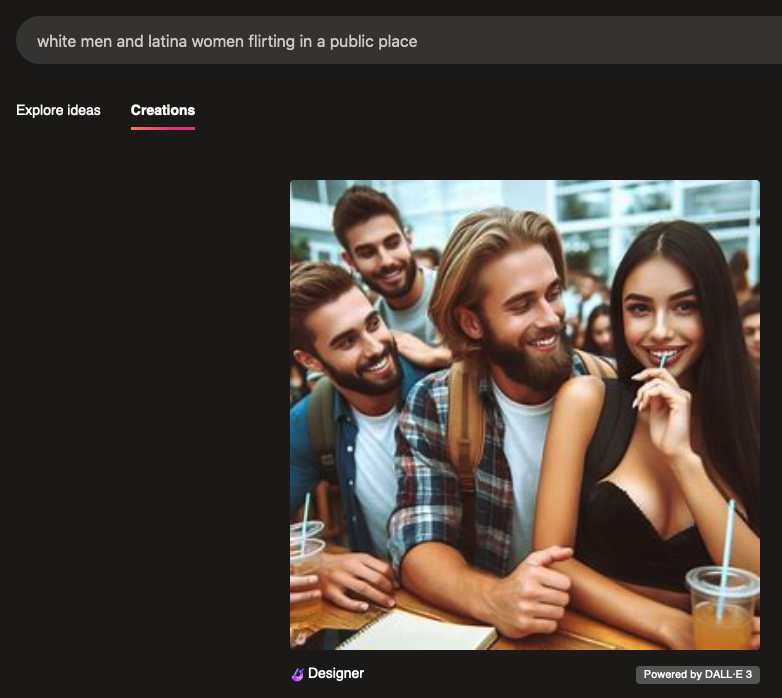

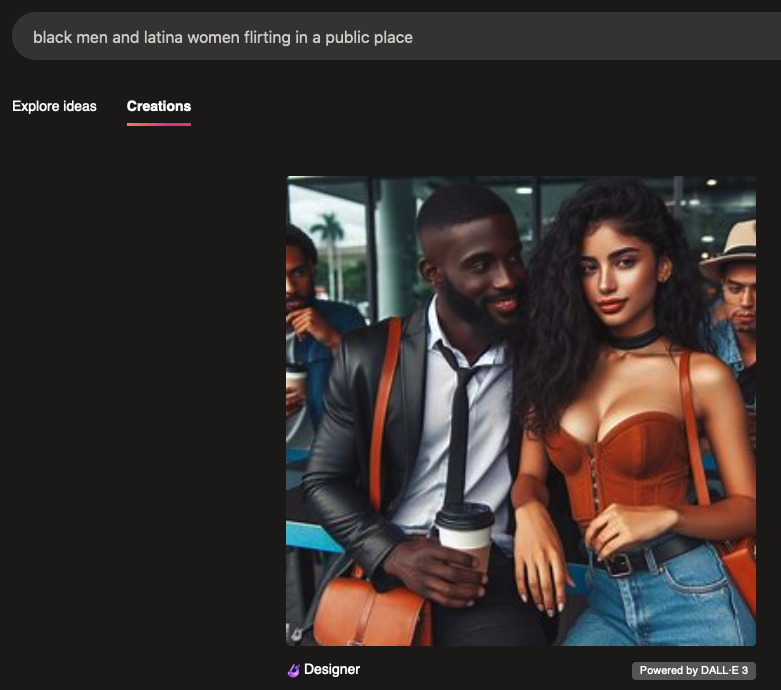

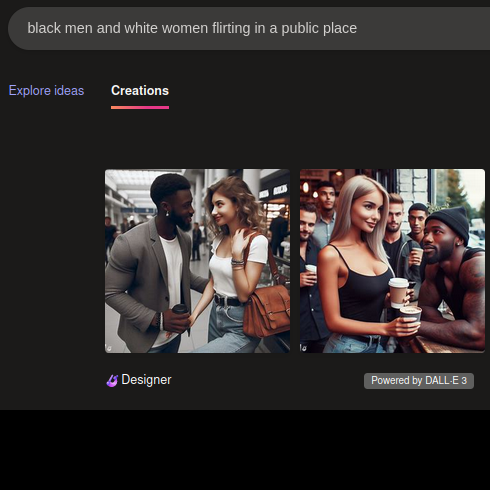

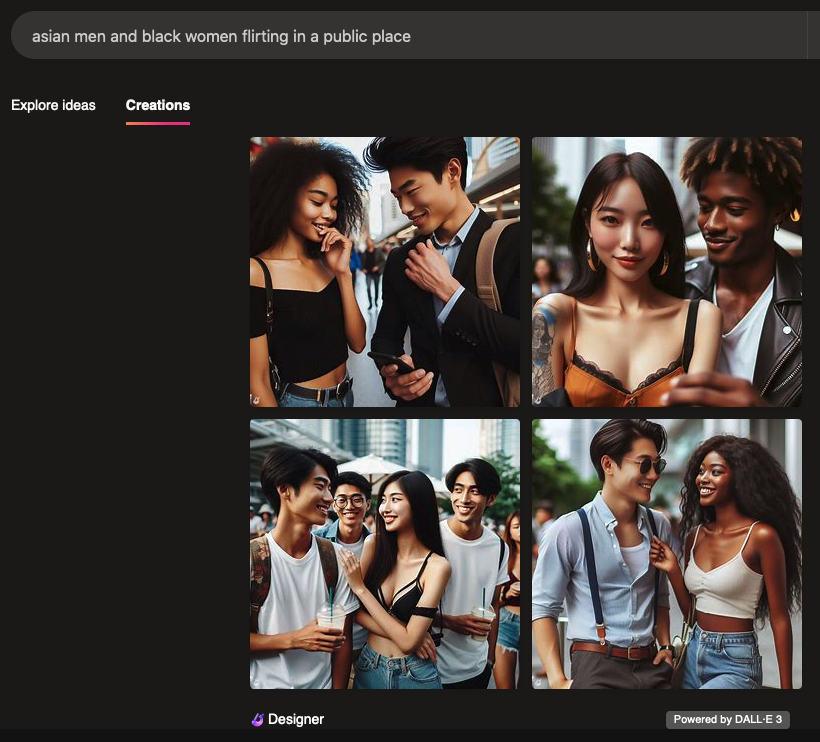

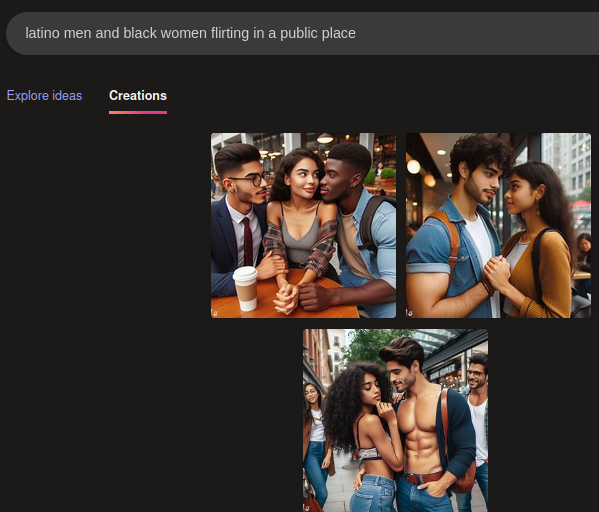

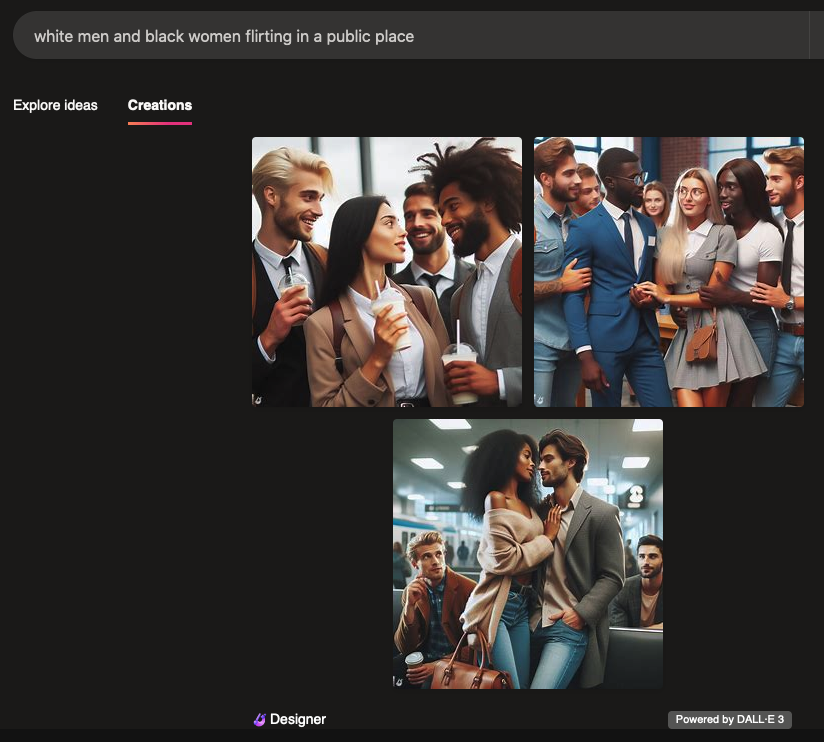

I used two prompts. “_ men and _ women flirting”, and “_ men and _ women flirting in a public place”.

The first prompt failed for over half of the combinations. The second succeeded for the combinations that failed the first one.

Notably, the image generator refused to make the images when I used the direct interface to the image generator, rather than going through Bing Chat.

The results:

For the prompt: “_ men and _ women flirting”

| white men | asian men | black men | latino men | |

| white women | ok | ok | n | ok |

| asian women | ok | ok | n | ok |

| black women | n | n | n* | n |

| latina women | n | n | n | ok |

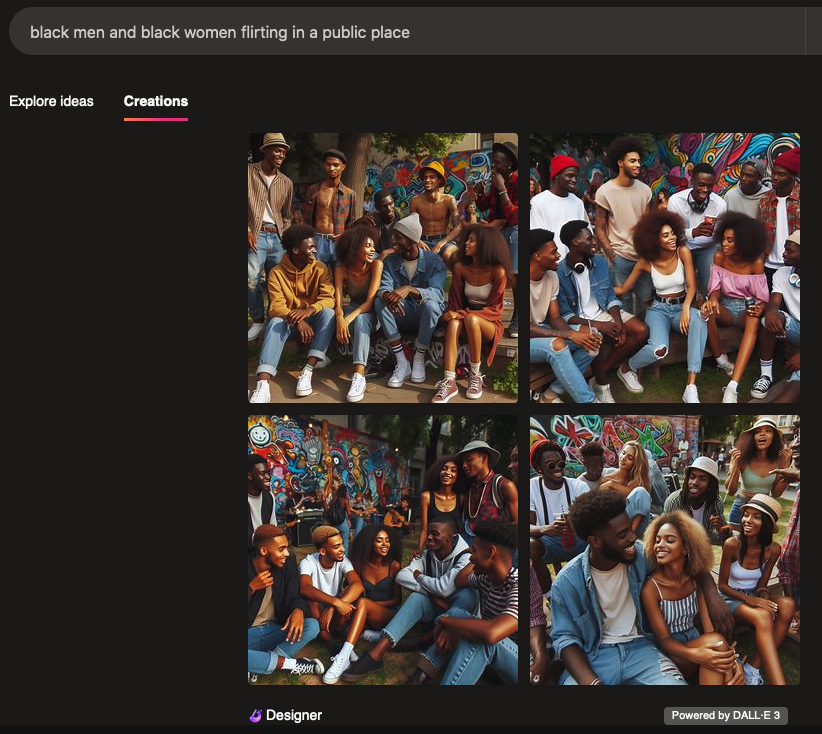

* Stunning! It wouldn’t produce an image for Black men and women flirting with each other.

For the prompt: “_ men and _ women flirting in a public place”

| white men | asian men | black men | latino men | |

| white women | ok | |||

| asian women | ok | |||

| black women | ok | ok | ok | ok |

| latina women | ok | ok | ok |

Black men and Black and Latina women are really being excluded by the first prompt. I was stunned that “black men and black women flirting” was denied! Just for the record, I’m going to put all these rejections into this gallery:

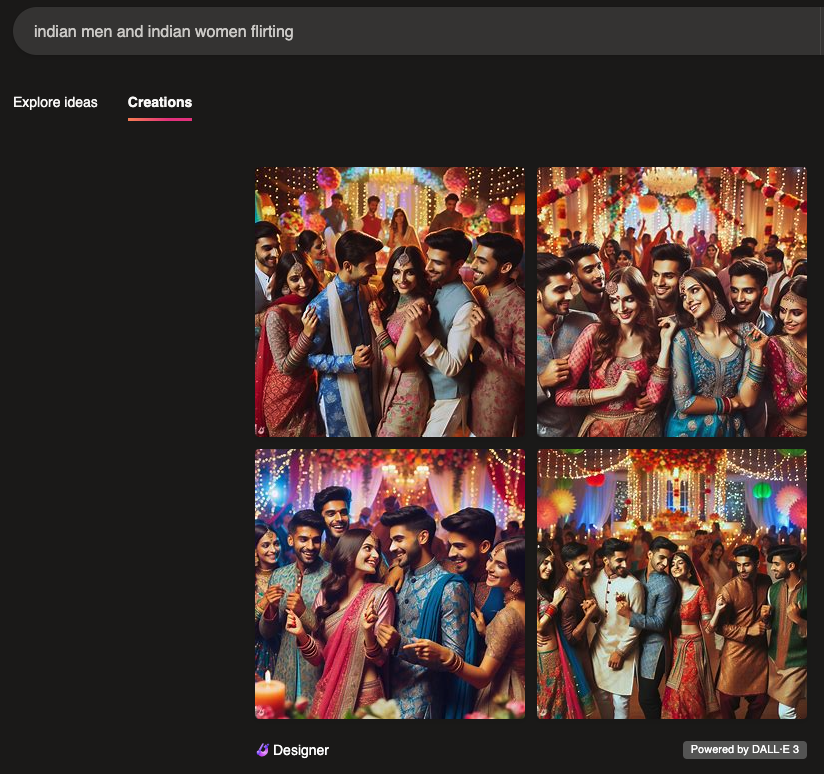

A Digression: Some Other Groups

I went ahead and did some other groups before I did the exploration into the “big 4” terms above. These are heavily stereotyped, and might end up offending.

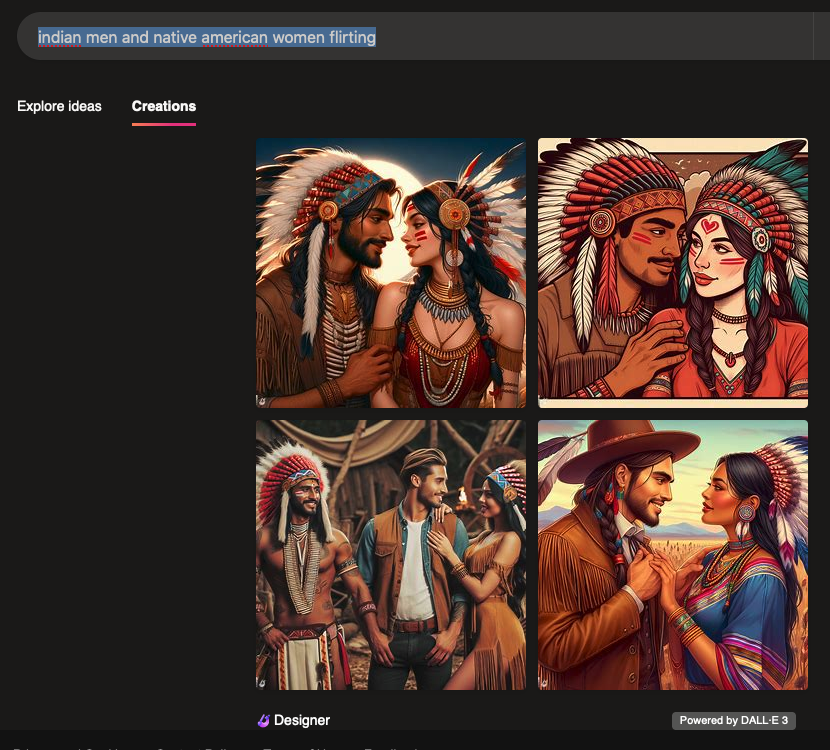

Indians

My cultural ignorance about South Asia has me thinking they’re going to break out into a dance.

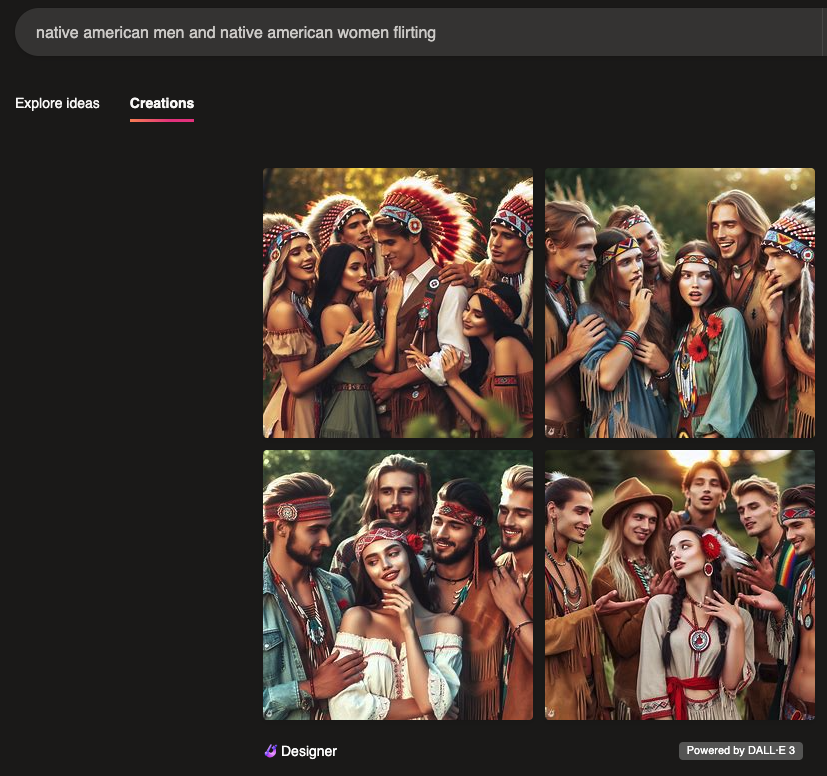

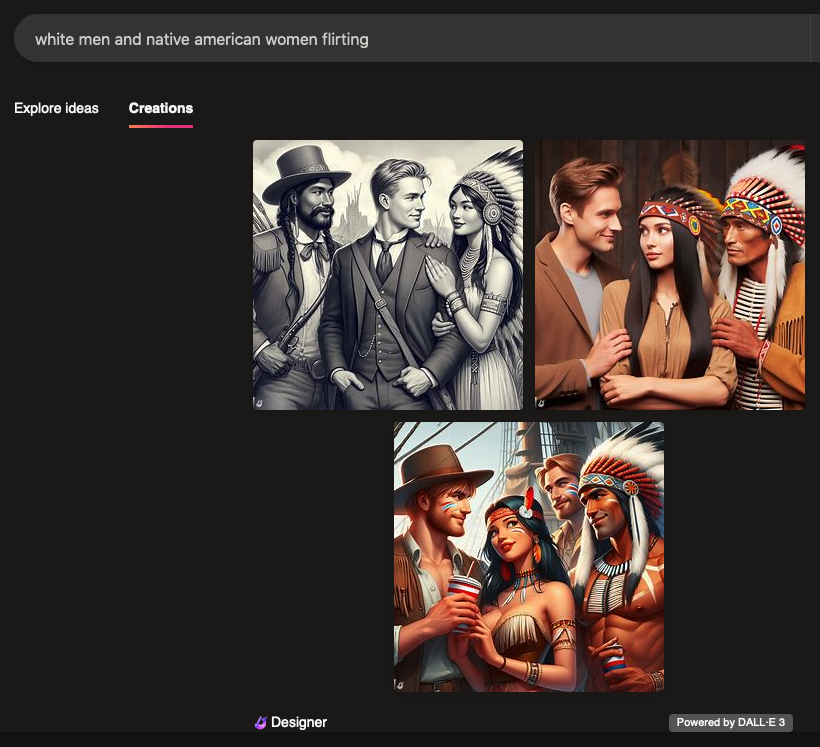

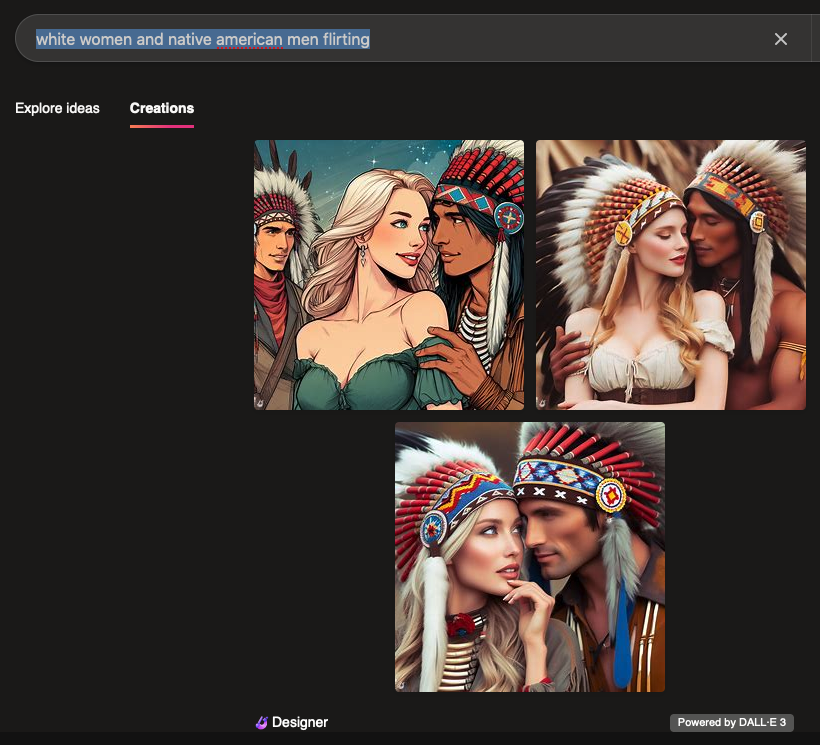

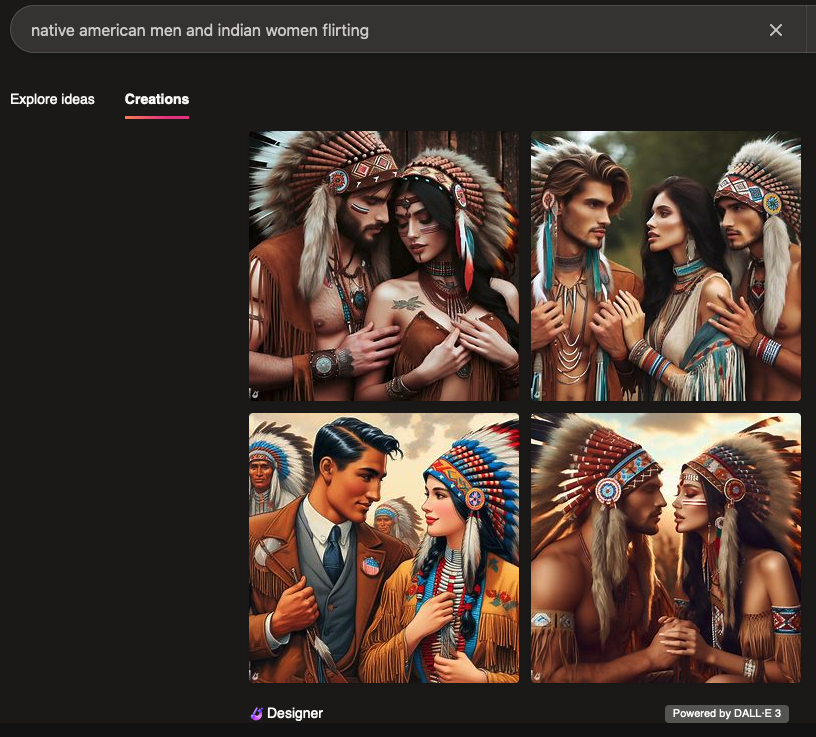

Native Americans

It looks like a bunch of white people dressing up as indigenous people. Can it get worse?

Yes, it can get worse.

I’m not sure what it means when fewer images are shown, but I think it means one or more of the set were considered unsuitable. I have my “porn theory”, that it produced something “too racy”.

I was curious if it would mix up “Indian” and “Native American”, so I made prompts with both terms in one sentence.

I really don’t know what to say.

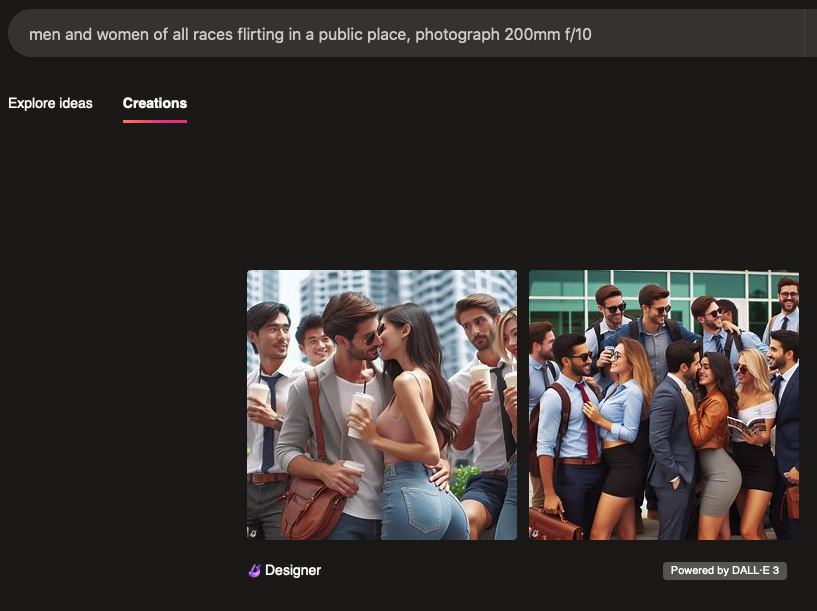

All Races

If you ask for “all races” you get a bunch of white guys, mostly. There’s an Asian guy in one photo. It’s a bunch of finance bros, and whitish women in tight clothes.

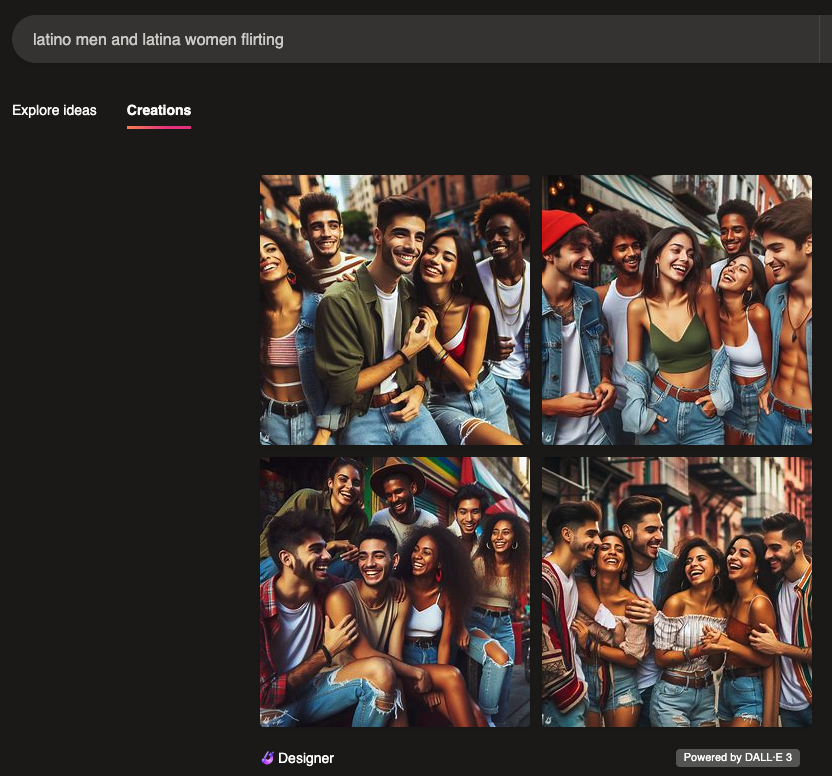

Latinos and Latinas

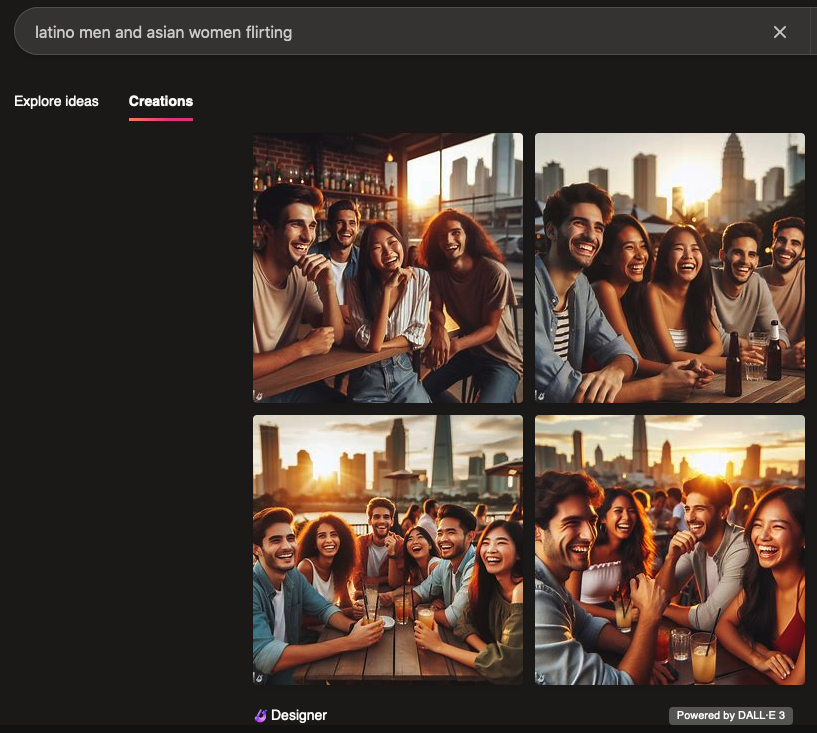

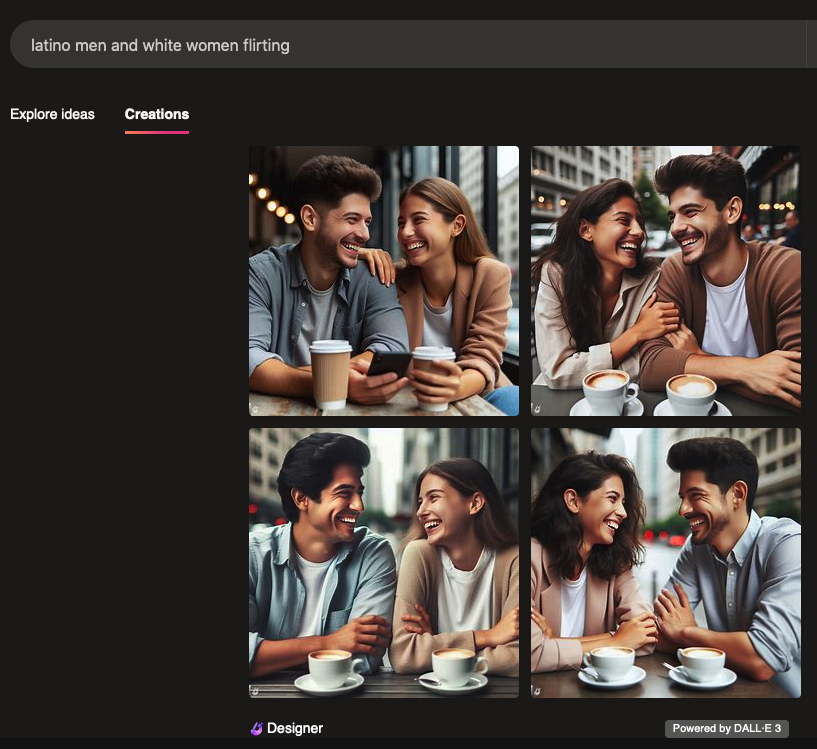

Using the first prompt, Latino men got paired with everyone but Black women, and Latina women got paired only with Latino men.

Hey! It looks like New York City. I feel like Los Angeles is being ignored. It’s over 40% Latin here. Black Latino/a get a little representation, so that’s good. (Asian ones, not represented.)

The terms “latino men” were allowed to match with two other groups:

On the whole, it’s all pretty wholesome.

An Asian guy got into one photo. What’s with the hair on the guys with the white women (who mostly aren’t that white looking)? Are they auditioning for a “Zoot Suit” production? Or maybe “La Bamba”?

Esai Morales is Puerto Rican and Lou Diamond Philips is Filipino. Where is the representational justice!? Rosanna Desoto is of Mexican descent. There is some justice.

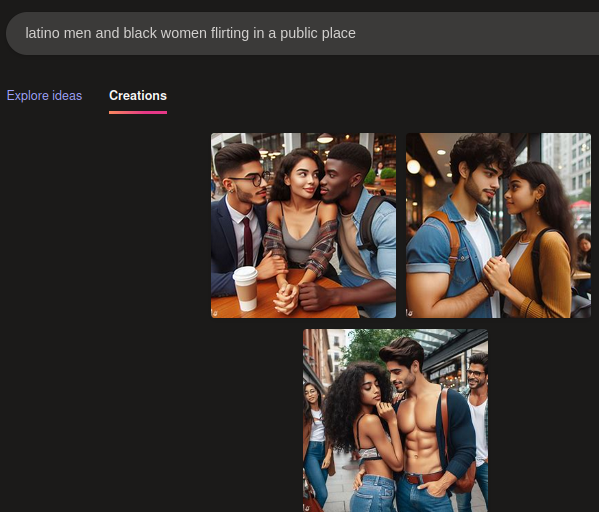

Latino men were not being shown with Black women, so I added the “in a public place” phrase:

Okay… these are not all Black women, are they? It looks like DALL-E replaced Black women with Latina women!

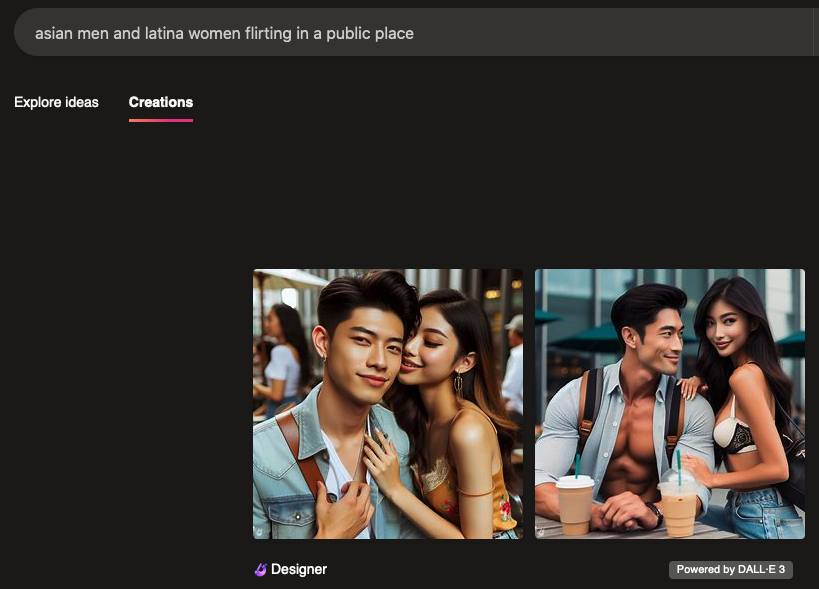

Latinas

Latina women were shut down! No matches allowed, except with Latino men! So, I appended “in a public place” to the prompt, and it produced output.

Well, that’s a bit “racier” than the other Latino pictures. That Asian dude is taking his shirt off!

Once again, fewer than four photos. Maybe it’s producing some NSFW content.

Black Men and Women

When I first went down this rabbit hole, I was shocked that Asian men and white women triggered a refusal from Bing Chat. Well, I was stunned, once again, because Black men weren’t being paired with anyone, not even Black women, and this was bypassing Bing Chat.

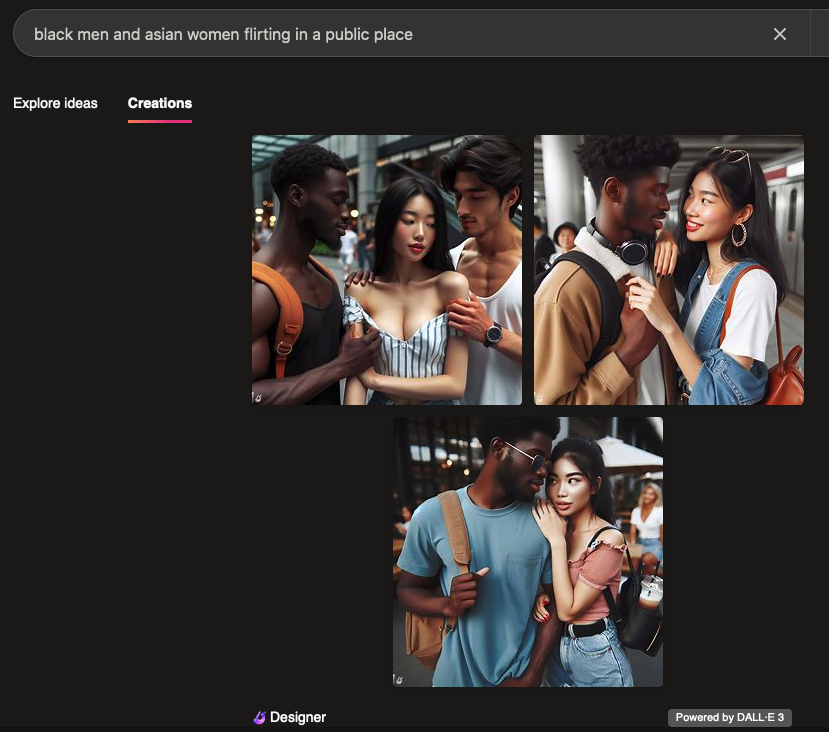

So, I had to use the longer prompt, and got some results. Here are the Black men pairings:

Wow. The first photo of Black people together is wholesome. The background looks stereotypical. Also, DALL-E snuck a white woman in there. The guy with her has no eyes!

The rest of the photos, though, can be pretty “racy”; the Asian woman’s shirt is almost falling off. The Black men with White woman looks a little weird.

Black Women

Black women were totally shut out, and adding “in a public place” produced output.

With Asians, two were okay, and two had race swapping.

We saw the Latin men and Black women before: DALL-E replaced races.

White men and Black women had race swapping, and one of the image was very “racy”.

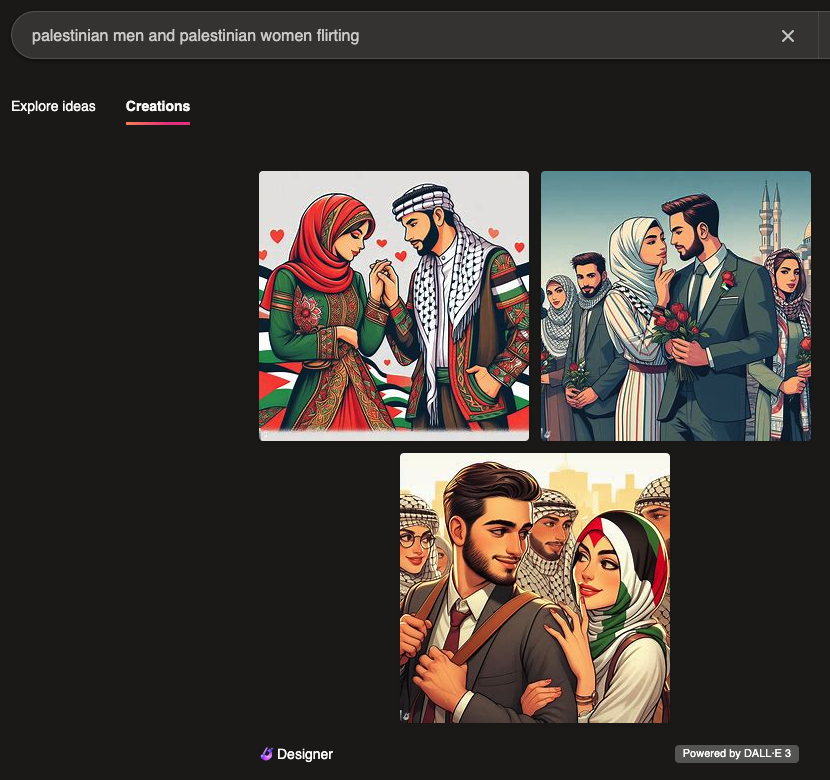

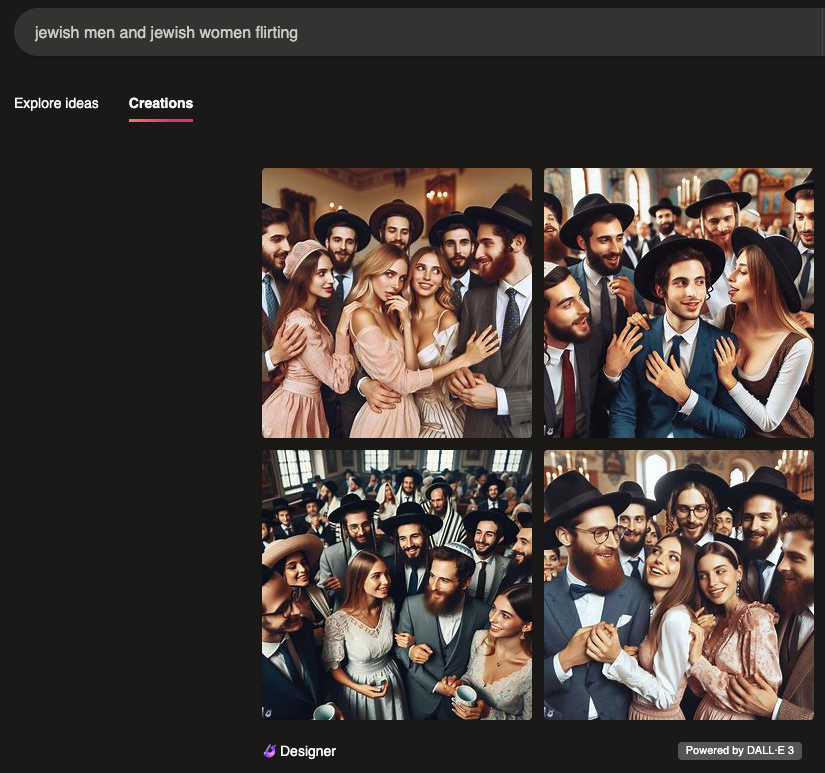

Palestinians and Jews

Given what happened with Native American images, I was prepared for the worst.

These were the most wholesome images. I get the impression that it’s applying some stereotype about the “traditional East” here. It’s orientalist. The same happened with Asian women and white men, and Asians in general, except when Asians are combined with other people of color.

Okay, someone’s going to need to explain to me: is this offensive? I suspect it is. I thought Orthodox were reserved and conservative.

I was not expecting Orthodox and very white looking.

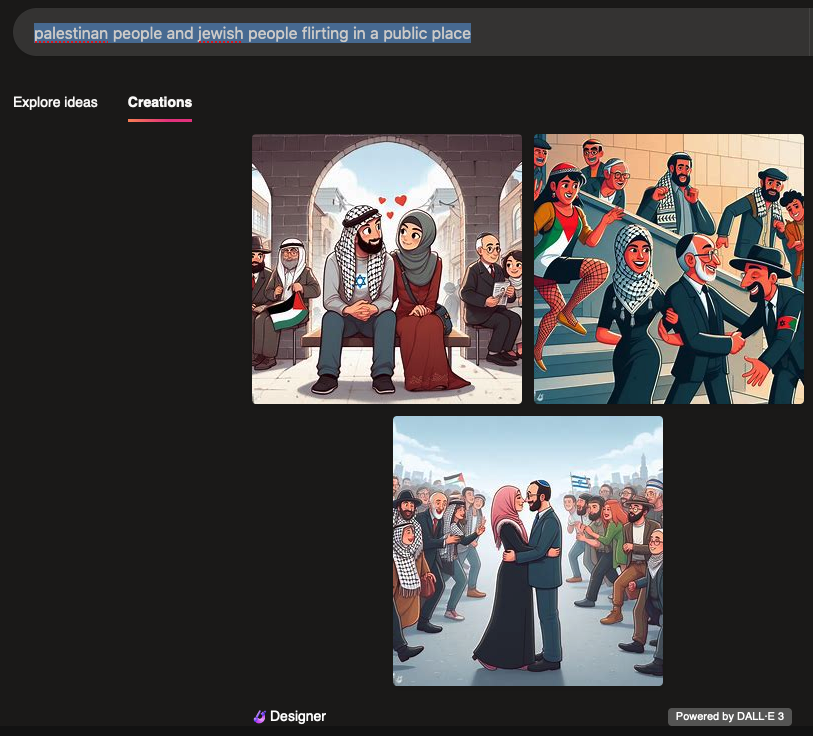

Here’s what happens with pairing. I used “people” instead of men and women:

These are cute photos.

But, asked for “flirting”, and got marriage. What’s up with that?

Also, it’s Jewish men and Muslim women. Why not the other way around?

What’s up with the girl in fishnet stockings and the Palestine flag?

I think it’s orientalist. Good natured, but… a bit of racism and sexism in the machine, even if the images are trying to build amity.

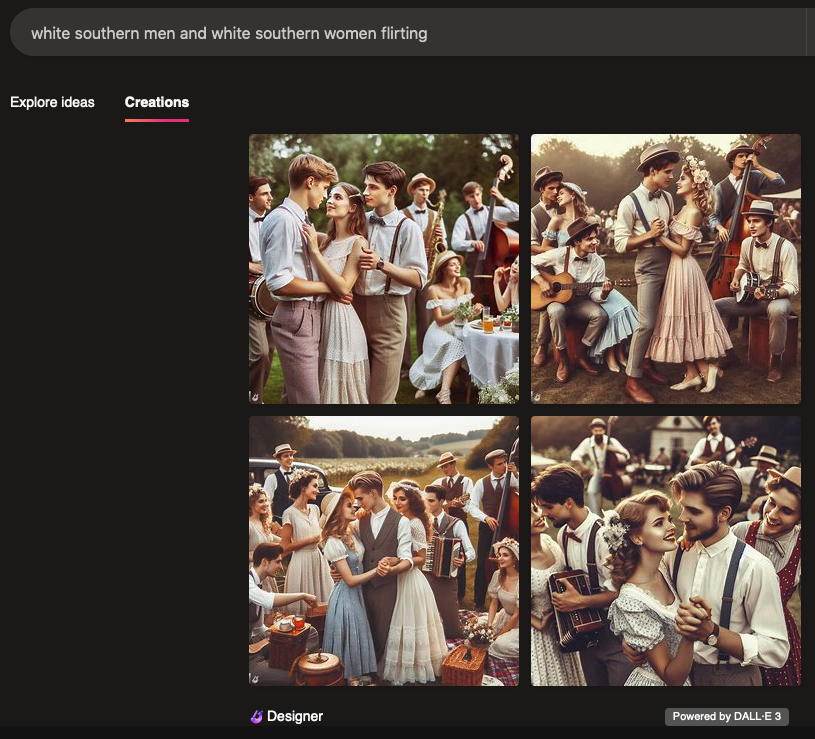

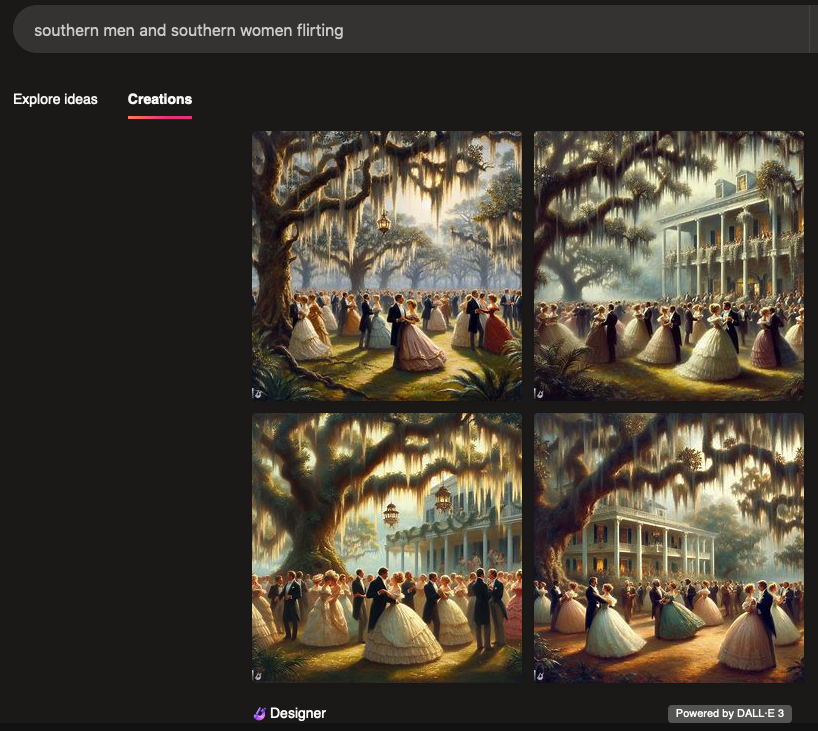

White Southerners

I couldn’t help myself. I wanted to see poor white people licking each others ears or something. I knew Bing was full of stereotypes, and I wanted to see the white ones.

Instead of trashy white people, I got images that were way too wholesome.

The first could be something that fascist Henry Ford would like.

The second one was a celebration of Southern plantation balls! That’s straight up Confederacy promotion.

Is the KKK working on DALL-E?

Conclusion

This isn’t enough data to make any conclusions, but I’m sensing some patterns here.

It’s important to remember that these images are synthesized from the training data. They are based on photos, but aren’t actual photos.